In this post, I’ll analyze my Google Calendar events to see exactly how much time I spend in meetings each week.

Does it seem like moving to be remote has increased the amount of time we spend in meetings? To find out, I’ll use the Google Calendar API to easily extract all my calendar events and analyze the data.

To start, you will have to follow the directions here to Enable the Google Calendar API. Once you have authenticated your account, we are ready to get started. Here are the packages we need:

# For Charting import pandas as pd import plotly.graph_objects as go import plotly.io as pio import plotly.express as px #Google API authentication from __future__ import print_function import datetime import pickle import os.path from googleapiclient.discovery import build from google_auth_oauthlib.flow import InstalledAppFlow from google.auth.transport.requests import Request

Next, we can grab our calendar events. I’ve slightly modified the code from Step 3 of the Getting Started page. There are two things you may want to change:

- Line 26 is where you choose how far back you want to go to get your calendar invites.

- Line 30 determines how many results to return. I set it at 1,000 to get everything.

# If modifying these scopes, delete the file token.pickle.

SCOPES = ['https://www.googleapis.com/auth/calendar.readonly']

creds = None

# The file token.pickle stores the user's access and refresh tokens, and is

# created automatically when the authorization flow completes for the first

# time.

if os.path.exists('token.pickle'):

with open('token.pickle', 'rb') as token:

creds = pickle.load(token)

# If there are no (valid) credentials available, let the user log in.

if not creds or not creds.valid:

if creds and creds.expired and creds.refresh_token:

creds.refresh(Request())

else:

flow = InstalledAppFlow.from_client_secrets_file(

'credentials.json', SCOPES)

creds = flow.run_local_server(port=0)

# Save the credentials for the next run

with open('token.pickle', 'wb') as token:

pickle.dump(creds, token)

service = build('calendar', 'v3', credentials=creds)

# Call the Calendar API

now = datetime.datetime(2020,6,1).isoformat() + 'Z' # 'Z' indicates UTC time

events_result = service.events().list(calendarId='primary',

timeMin=now,

maxResults=1000, singleEvents=True,

orderBy='startTime').execute()

events = events_result.get('items', [])

if not events:

print('No upcoming events found.')

for event in events:

summary = event['summary']

start = event['start'].get('dateTime', event['start'].get('date'))

end = event['end'].get('dateTime', event['end'].get('date'))Now all the events are stored as JSON in a variable called events. To take a look at one event, run events[0].

There are a lot of data points for each event. In the next step, I’ll put the data into a DataFrame and isolate the fields I need for this analysis.

calendar_events_df = pd.DataFrame(events)[['summary','start','end']]

We then have to break up the dictionary in the columns:

nested_columns = ['start','end']

for i in nested_columns:

temp_df = calendar_events_df[i].apply(pd.Series).iloc[:,0].rename(i)

calendar_events_df = pd.concat([calendar_events_df.drop([i],axis=1),temp_df],axis=1)Next, we will convert the date columns:

date_columns = ['start','end']

for i in date_columns:

calendar_events_df[i] = pd.to_datetime(calendar_events_df[i], utc=True).dt.tz_convert(None) #convert time zone aware date to non-timezone awareNow we have the start and end dates formatted and can move on to calculating the duration of each event. I’m essentially subtracting the dates, returning the seconds, then converting those into minutes, and then finally into hours:

calendar_events_df['Difference_seconds'] = (calendar_events_df['end'] - calendar_events_df['start']).dt.seconds calendar_events_df['Difference_minutes'] = calendar_events_df['Difference_seconds']/60 calendar_events_df['Difference_hours'] = calendar_events_df['Difference_minutes']/60

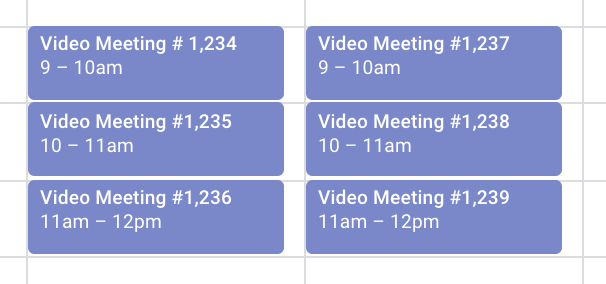

At this point, our DataFrame should look something like this:

Now I am going to apply a filter to remove some events that are not meetings. Sometimes on my calendar, I’ll block off large chunks of time to prevent a meeting from being scheduled, for out of offices, and travel itineraries. In my case, it’s usually anything longer than an hour.

filt = (calendar_events_df['start'] <= datetime.datetime.now()) & (calendar_events_df['Difference_hours'] <=1) calendar_events_df_filtered = calendar_events_df.loc[filt]

The last data transformation is going to be to aggregate the data by week and sum the duration.

df = calendar_events_df_filtered.groupby(pd.Grouper(key='start',freq='W-SUN')).sum().reset_index()

Finally, we are ready to plot the data to see what the data looks like. My x-axis is going to be the week. My y-axis is going to be total hours in meetings divided by 40 and will represent the percentage of time spent in meetings. I am using Plotly for my visualizations.

df = calendar_events_df_filtered.groupby(pd.Grouper(key='start',freq='W-SUN')).sum().reset_index()

fig = go.Figure([go.Scatter(x=df['start'],

y=df['Difference_hours']/40, #40 working hours per week

hovertemplate='<b>%{y:.0%}</b>')])

fig.update_layout(template='none', title='Paul Time spent in Meetings each week', title_x=0.05, yaxis=dict(range=[0, 0.50],tickformat=",.0%"))

fig.show()After all that it looks like it’s anywhere between 30-40% of my time is in meetings. Is that a lot? I’ll let you (or my manager) determine that one. Thanks for reading!