What I Do

Make Data Simpler

Reduce complexity, remove friction, and create systems that are easier to understand and use.

Build Momentum

Move from scattered data to dependable answers that support better decisions and faster action.

Work End to End

From source data to final reporting, every step is designed with the full picture in mind.

Featured Blog Posts

Why SaaS Is Heading Into a Tough Year

SaaS succeeded by abstracting away infrastructure when building and running software was hard. That trade-off made sense for a long time. But as infrastructure becomes cheaper and AI lowers the cost of building custom solutions, the economics that justified renting platforms are starting to break down. This post explores why that shift is putting pressure on traditional SaaS models—and what it means for teams deciding what they should actually own versus outsource.

Read more →

Rethinking the Modern Data Stack

As data engineering matured, factories became the default. Assembly lines brought reliability when tools were weak, storage was expensive, and mistakes were costly. But the constraints changed, and the architecture didn’t. Today, we still build stacks by habit—pre-aggregating, orchestrating, and industrializing work before we understand it. In this post, I argue for an anti-stack mindset: start with workshops, use strong tools at query time, delay pipelines until they’re earned, and treat factories as an optimization—not a prerequisite. The goal of modern data engineering isn’t to keep the line running. It’s to deliver insights.

Read more →

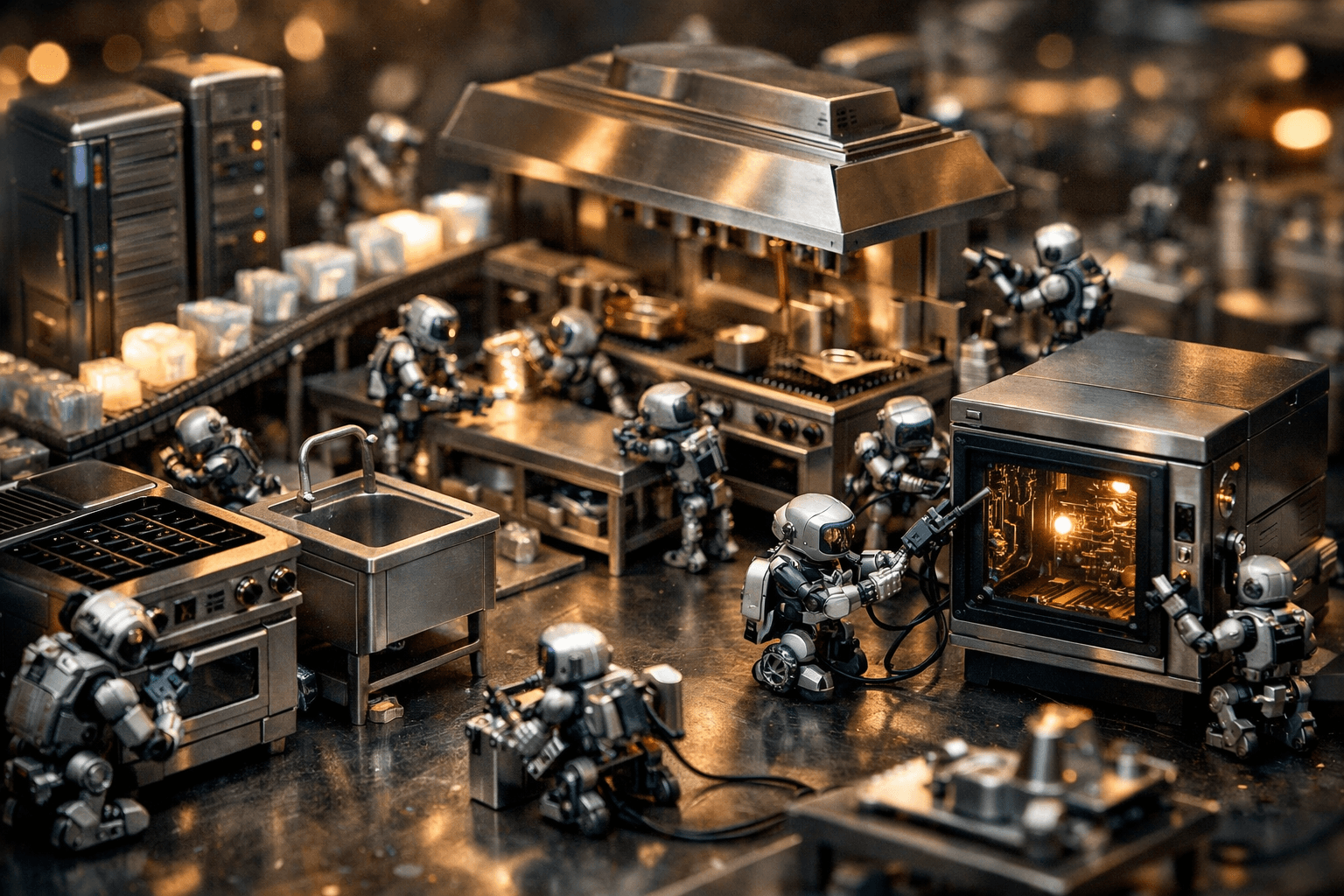

Building for Clarity: From Wiring Kitchens to Serving Ideas

Modern development has become a kitchen remodel — endless setup, plumbing, and wiring before anything gets cooked. Platforms like Replit flip that script, giving you a ready-to-go kitchen so you can focus on what matters: the meal. In this post, I explore how AI is becoming the logic layer — the brain of the kitchen — and why the real value now lies in the data that grounds it. Building for clarity means offloading logic to AI, anchoring it in a reliable database, and designing systems that serve ideas, not infrastructure.

Read more →

Modern Data Architecture Explained Through the Kitchen

Modern data architecture can feel like alphabet soup: databases, warehouses, lakes, lakehouses, catalogs. With Microsoft Fabric making Delta Lake the default, it’s never been more important to understand how these systems fit together. In this post, I use a kitchen metaphor (lunchboxes, buffets, pantries, and chefs) to break down the strengths and weaknesses of each before looking at DuckLake, a new approach that puts metadata where it belongs: in a database. The goal isn’t hype; it’s clarity, so you can design an architecture that feeds your business real insight.

Read more →

The Next-Gen BI Tool Isn’t a Tool — It’s a Kit

Traditional BI tools prioritize speed and ease but often sacrifice flexibility and customization. Today, the rise of AI, modular libraries, and instant cloud platforms like Replit empowers data engineers to build highly customizable, interactive, and user-focused data experiences without needing full-stack development expertise. This shift transforms BI from rigid, one-size-fits-all dashboards into composable, code-assisted data product kits that deliver tailored insights and enable narrative-driven storytelling. Discover how the future of BI is no longer a monolithic platform but a flexible toolkit that bridges data engineering and user experience.

Read more →

About Me

I'm a data engineer passionate about building clean, scalable systems and making complex analytics feel simple. Whether it's automation, infrastructure, or insights, I focus on solutions that actually get used.

Learn more →

Ready to Connect?

Whether you're looking for help on a project or want to stay in the loop with practical data insights — I'd love to hear from you.